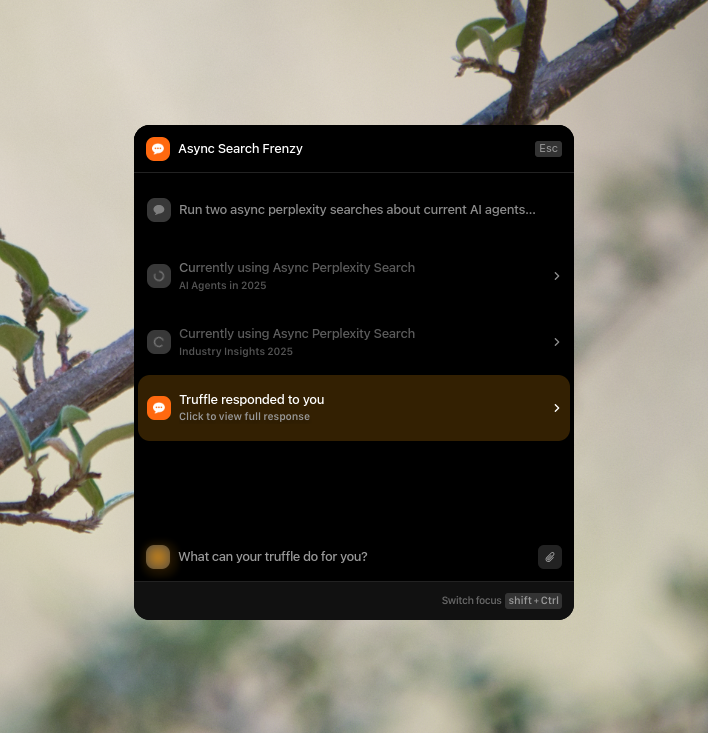

Async tools in Truffle

Making your tool async allows the model to run it as a background task. This is especially useful for long-running processes, such as waiting on a slow API or performing inference. Results from async tools will automatically be appended to the context, making the experience seamless. You could, for example:

- Call multiple slow SQL databases

- Run a deep research API call while continuing with other tasks

- Or even launch several deep research tasks in parallel
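The underlying idea, independent of Truffle, can be illustrated in plain Python with `asyncio`. This sketch is not Truffle code: `slow_query` is a hypothetical stand-in for a slow SQL or deep research call, and the `context` list stands in for the model's context. The three calls run concurrently, so the total wait is roughly that of the slowest call rather than the sum of all three.

```python
import asyncio
import time


async def slow_query(name: str, delay: float) -> str:
    """Hypothetical stand-in for a slow database or research API call."""
    await asyncio.sleep(delay)  # simulate network / inference latency
    return f"{name}: done after {delay:.1f}s"


async def main() -> tuple[list[str], float]:
    context: list[str] = []  # stands in for the model's context
    start = time.perf_counter()
    # Launch all calls at once; gather waits until every one has finished
    # and returns results in the order the calls were passed in.
    results = await asyncio.gather(
        slow_query("sales_db", 0.3),
        slow_query("users_db", 0.2),
        slow_query("research", 0.1),
    )
    context.extend(results)  # append each result to the context
    return context, time.perf_counter() - start


if __name__ == "__main__":
    context, elapsed = asyncio.run(main())
    print(context)
    print(f"elapsed ~{elapsed:.1f}s (sequential would be ~0.6s)")
```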

To make a tool async, add the nonblock flag to @truffle.flags before the function definition:

@truffle.flags(nonblock=True)
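Truffle's own implementation is not shown here. As a rough mental model only, the sketch below uses a hypothetical `flags` decorator (not the real `truffle.flags`) that records a `nonblock` attribute on the function, plus a hypothetical `call_tool` dispatcher: blocking tools are awaited before the caller continues, while non-blocking tools are started as background tasks whose results are appended to a `context` list when they finish.

```python
import asyncio
from typing import Any, Awaitable, Callable

ToolFn = Callable[..., Awaitable[Any]]


def flags(nonblock: bool = False) -> Callable[[ToolFn], ToolFn]:
    """Hypothetical stand-in for @truffle.flags: records the flag on the function."""
    def decorate(fn: ToolFn) -> ToolFn:
        fn.nonblock = nonblock
        return fn
    return decorate


@flags(nonblock=True)
async def deep_research(topic: str) -> str:
    await asyncio.sleep(0.2)  # simulate a long-running API call
    return f"report on {topic}"


@flags()
async def add(a: int, b: int) -> int:
    return a + b


async def call_tool(fn: ToolFn, context: list, background: set, *args: Any) -> Any:
    """Hypothetical dispatcher: await blocking tools, background non-blocking ones."""
    if not getattr(fn, "nonblock", False):
        return await fn(*args)  # caller waits for the result

    async def run_and_append() -> None:
        context.append(await fn(*args))  # result lands in context when ready

    task = asyncio.create_task(run_and_append())
    background.add(task)  # keep a reference so the task isn't garbage-collected
    task.add_done_callback(background.discard)
    return f"{fn.__name__} started in background"


async def main() -> list:
    context: list = []
    background: set = set()
    print(await call_tool(deep_research, context, background, "async tools"))
    print(await call_tool(add, context, background, 2, 3))  # returns 5 immediately
    await asyncio.gather(*background)  # demo only: wait so we can inspect context
    return context


if __name__ == "__main__":
    print(asyncio.run(main()))
```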

Async Search

The default Chat app comes with an async search tool; try it out!

The app might respond to the user before the async task has finished. Once the tool finishes, its result is injected into the context, but the agent won't proactively follow up; it waits for your next request.
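To make that timing concrete, here is a toy simulation in plain `asyncio`. `Session`, `start_search`, and `on_user_message` are hypothetical names, not Truffle classes or methods. The search result is appended to `context` as soon as it completes, yet no new message is sent to the user; the agent only uses the result when the next user message arrives.

```python
import asyncio


class Session:
    """Hypothetical model of a chat session; not Truffle's actual classes."""

    def __init__(self) -> None:
        self.context: list[str] = []     # what the agent can see
        self.transcript: list[str] = []  # messages actually sent to the user
        self._tasks: set = set()         # strong refs to background tasks

    def start_search(self, query: str) -> None:
        async def search() -> None:
            await asyncio.sleep(0.1)  # simulated slow search
            # Injected into context on completion; no message is sent to the user.
            self.context.append(f"search result for {query!r}")

        task = asyncio.create_task(search())
        self._tasks.add(task)
        task.add_done_callback(self._tasks.discard)

    def on_user_message(self, text: str) -> str:
        self.context.append(f"user: {text}")
        if text.startswith("search "):
            self.start_search(text.removeprefix("search "))
            reply = "Search started; ask me again in a moment."
        else:
            results = [c for c in self.context if c.startswith("search result")]
            reply = results[-1] if results else "No results yet."
        self.transcript.append(reply)
        return reply


async def demo() -> Session:
    s = Session()
    s.on_user_message("search async tools")  # replies before the search finishes
    await asyncio.sleep(0.2)                 # search completes in the background
    assert len(s.transcript) == 1            # ...but the agent has not followed up
    s.on_user_message("any news?")           # next request sees the injected result
    return s


if __name__ == "__main__":
    session = asyncio.run(demo())
    print(session.transcript)
```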